In a previous article in the Newsletter, “The Post Office Horizon scandal: the law says computers are reliable”, I set out an outline of the facts leading up to the second trial against the Post Office by the Justice for Subpostmasters Alliance, set up by Sir Alan Bates, recently and deservedly appointed a Knight Bachelor in […]

Read More

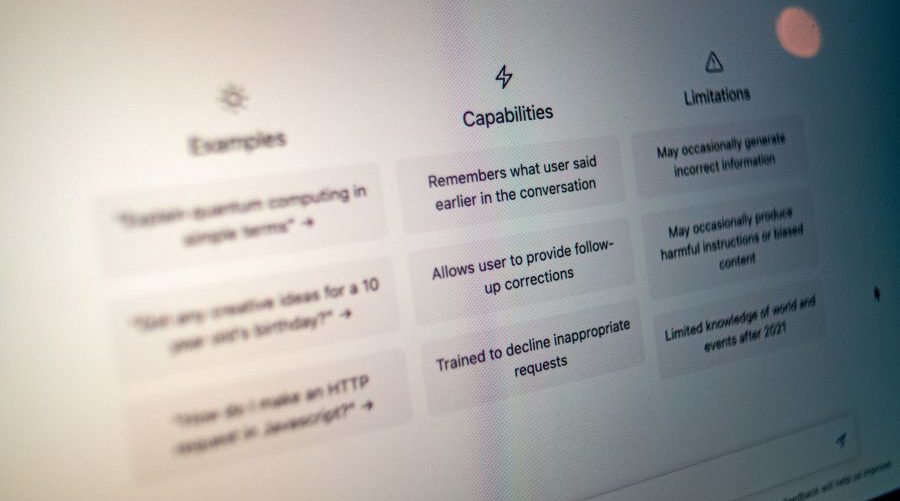

EU AI Act passed. The European Parliament approved the Artificial Intelligence Act (EU AI Act) on 13 March 2024. It has been hailed as the world’s first comprehensive and binding piece of legislation on AI, although many of its provisions won’t be enforced for at least a year or two. Rather than attempting to regulate specific technologies, […]

Read More

Since ChatGPT was released to the public in November 2022, countless articles have been written about how generative artificial intelligence (GenAI) will improve the efficiency of white-collar workers, including legal professionals, and perhaps eventually lead to job losses. Ironically, it’s the very people writing about the revolutionary potential of this technology who have been […]

Read More

The idea that the law should be freely accessible to all the people is nothing new, but it is technology that has enabled that aspiration to be realised. ICLR has taken advantage of that to provide, alongside its reported case law subscription service, a freely accessible version of both unreported judgments and legislation. Recent developments […]

Read More

After a long time in the making, the Online Safety Act finally received Royal Assent on 26 October 2023. According to the accompanying Government press release, the Act “places legal responsibility on tech companies to prevent and rapidly remove illegal content” and aims “to stop children seeing material that is harmful to them”. So what […]

Read More

The SCL AI Group have released their Artificial Intelligence Contractual Clauses document which is free to access and share under a Creative Commons Licence. The development and use of AI will increase significantly over the next few years. AI systems will therefore increasingly become the subject matter of transactional contracts. AI technologies create new and unique risks which will […]

Read More

As reported here, in April 2022 The National Archives launched its Find Case Law service, and 6 months on John Sheridan of TNA described the progress that had been made. Meanwhile, ICLR systematically monitored the publication of listed cases by TNA over its first 12 months of operation. The resulting report, Publication of listed judgments: […]

Read More

It’s been almost a year since ChatGPT was released to the public back in November 2022. Although much has been written about the impact of generative AI on the legal sector as a whole, there has been less focus on its potential to improve access to justice for the ordinary citizen who cannot afford a […]

Read More

In April 2023, legal tech disruptors vLex and Fastcase merged to form the world’s largest global law library, in what has been described as one of the most consequential mergers in the history of legal tech. The teams have now fully integrated, and over one billion legal documents are accessible on vLex’s AI-powered legal research […]

Read More

In the wake of an avalanche of publicity following the hugely successful roll-out of ChatGPT, governments around the world have been waking up to the transformative effects of generative AI tools upon their societies, economies and legal systems. Stark warnings from leading industry figures such as Sam Altman, Elon Musk and Geoffrey Hinton, about the […]

Read More

Following his recent article on ChatGPT’s implications for the legal world, Alex Heshmaty garners answers to further questions from Dr Ilia Kolochenko. Who owns the copyright of ChatGPT responses? This rapidly evolving question is largely unsettled across jurisdictions; in most cases, probably no one. Is it possible for original copyright holders to prevent ChatGPT (or Bard) […]

Read More

The Government recently published a White Paper, “A pro-innovation approach to AI regulation”, setting out its proposals for AI regulation, in conjunction with an impact assessment and consultation paper. Jo Frears, IP & Technology Leader at Lionshead Law, considers some of the key points. The meaning of “AI”: The White Paper acknowledges that there is […]

Read More

infolaw Limited, 5 Coval Passage, London SW14 7RE. Registered in England number 2602204. VAT number GB 602861753.